Facebook recently introduced Opacus, a free, open-source library for training deep learning models with differential privacy. The tool is designed for simplicity, flexibility, and speed. Its compact API lets machine learning practitioners make a training pipeline private by adding as few as two lines to their code.

The source code of Opacus is available on GitHub.

Introducing Opacus

Over the years, differential privacy has emerged as the leading notion of privacy for statistical analyses. It allows performing complex computation tasks over large datasets while withholding information about individual data points.

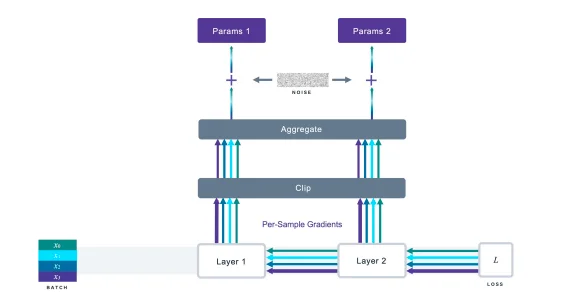

Differentially private stochastic gradient descent (DP-SGD), a modification of SGD, ensures differential privacy on every model parameter update. Instead of computing the average gradient over a batch of samples, a DP-SGD implementation computes per-sample gradients, clips their norms, aggregates them into the batch gradient and adds Gaussian noise.
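In symbols, one DP-SGD step can be written roughly as follows. This is the commonly used textbook formulation rather than anything specific to Opacus, and the symbols are notation assumed here: $C$ is the clipping threshold, $\sigma$ the noise multiplier, $B$ the batch size and $\eta$ the learning rate.

```latex
\begin{aligned}
g_i &= \nabla_\theta \, \ell(\theta; x_i, y_i)
  && \text{(per-sample gradient)} \\
\bar{g}_i &= g_i \cdot \min\!\left(1, \frac{C}{\lVert g_i \rVert_2}\right)
  && \text{(clip so that } \lVert \bar{g}_i \rVert_2 \le C\text{)} \\
\tilde{g} &= \frac{1}{B}\left(\sum_{i=1}^{B} \bar{g}_i
  + \mathcal{N}\!\left(0, \sigma^2 C^2 I\right)\right)
  && \text{(aggregate and add Gaussian noise)} \\
\theta &\leftarrow \theta - \eta \, \tilde{g}
  && \text{(parameter update)}
\end{aligned}
```

Clipping bounds how much any single sample can move the sum, and the noise is scaled to that bound, which is what makes the update differentially private.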

The image below illustrates the DP-SGD algorithm: the single-coloured lines represent per-sample gradients, the width of each line shows its norm, and the multi-coloured lines show the aggregated gradients.

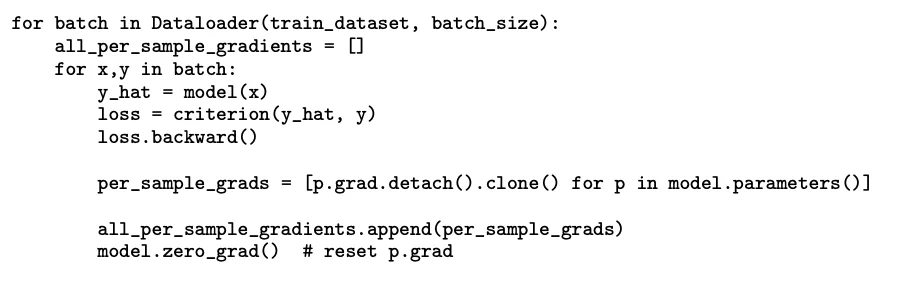

However, deep learning frameworks such as TensorFlow and PyTorch do not expose intermediate computations, including per-sample gradients, mainly for efficiency. As a result, users only have access to the gradients averaged over a batch. A simple way to implement DP-SGD is therefore to split each batch into micro-batches of size one, compute the gradient on each micro-batch, clip it, and then aggregate and add noise.

Here is a code snippet to yield the per-sample gradients through micro batching:
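A minimal, framework-free sketch of the idea is shown below. It is an illustration under stated assumptions, not Opacus or PyTorch code: `batch_mean_gradient` is a hypothetical stand-in for a framework call that only returns the batch-averaged gradient (here for a toy linear model with squared-error loss), and the clipping norm, noise multiplier and learning rate are arbitrary example values.

```python
import math
import random


def batch_mean_gradient(w, batch):
    """Hypothetical stand-in for a framework that only exposes the gradient
    averaged over a batch (toy linear model, squared-error loss)."""
    grad = [0.0] * len(w)
    for x, y in batch:
        err = sum(wj * xj for wj, xj in zip(w, x)) - y
        for j, xj in enumerate(x):
            grad[j] += 2.0 * err * xj / len(batch)
    return grad


def clip(grad, max_norm):
    """Scale grad down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_gradient_microbatched(w, batch, max_norm, noise_multiplier, rng):
    """Recover per-sample gradients with micro-batches of size one,
    then clip each one, sum, add Gaussian noise and average."""
    total = [0.0] * len(w)
    for sample in batch:
        g = batch_mean_gradient(w, [sample])  # micro-batch of size one
        g = clip(g, max_norm)
        total = [t + gj for t, gj in zip(total, g)]
    std = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, std) for t in total]
    return [n / len(batch) for n in noisy]


# Toy data: (features, target) pairs.
batch = [([1.0, 2.0], 3.0), ([0.5, -1.0], 0.0), ([2.0, 0.0], 1.0)]
w = [0.0, 0.0]
rng = random.Random(0)
grad = dp_gradient_microbatched(w, batch, max_norm=1.0, noise_multiplier=1.1, rng=rng)
w = [wj - 0.1 * gj for wj, gj in zip(w, grad)]  # one SGD step, learning rate 0.1
```

Note that the inner loop calls the gradient routine once per sample, which is exactly the pattern that wastes a batched accelerator.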

While micro-batching does yield correct per-sample gradients, it can be very slow in practice because it underutilises hardware accelerators such as GPUs and TPUs, which are optimised for batched, data-parallel computation. That is where Opacus comes in: it computes per-sample gradients with vectorised operations instead of micro-batching.
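To see why vectorisation is possible at all, consider a single fully connected layer. The notation below is assumed here for illustration and is not taken from the Opacus source: $a_i$ is the layer input for sample $i$, $z_i$ its output, and $\ell_i$ the loss on that sample.

```latex
z_i = W a_i, \qquad
\delta_i = \frac{\partial \ell_i}{\partial z_i}, \qquad
\frac{\partial \ell_i}{\partial W} = \delta_i \, a_i^{\top}
```

Each per-sample gradient is an outer product of two quantities that a normal batched forward and backward pass already produces for every sample. All $B$ outer products can therefore be formed in one batched operation rather than in $B$ separate passes.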

Here are some of the design principles and key features of Opacus:

- Simplicity: Opacus offers a compact API that is easy to use for researchers and engineers. In other words, users need not know the details of DP-SGD to train their ML models with differential privacy.

- Flexibility: Opacus supports rapid prototyping by users proficient in PyTorch and Python.

- Speed: Opacus seeks to minimise the performance overhead of DP-SGD by supporting vectorised computation.

Besides this, other key features include privacy accounting, model validation, Poisson sampling, vectorised computation, virtual steps, custom layers and secure random number generation.

Privacy accounting: Opacus provides out-of-the-box privacy tracking with an accountant based on Rényi differential privacy (RDP). It tracks how much of the privacy budget has been spent at any point in time, which enables early stopping and real-time monitoring.
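The accounting idea can be sketched in a few lines. This is a deliberately simplified illustration, not the Opacus accountant: it uses the RDP bound for the plain Gaussian mechanism and ignores the privacy amplification from sampling, so it overestimates the budget that DP-SGD actually spends. The function names, the grid of orders `alphas` and all numeric values are assumptions made for the example.

```python
import math


def gaussian_rdp(alpha, noise_multiplier, steps):
    """RDP of order alpha after `steps` compositions of the Gaussian
    mechanism (sensitivity 1, noise std = noise_multiplier, no subsampling)."""
    return steps * alpha / (2.0 * noise_multiplier ** 2)


def epsilon_spent(noise_multiplier, steps, delta, alphas):
    """Convert RDP to (epsilon, delta)-DP and keep the tightest order."""
    return min(
        gaussian_rdp(a, noise_multiplier, steps) + math.log(1.0 / delta) / (a - 1.0)
        for a in alphas
    )


def steps_within_budget(target_epsilon, noise_multiplier, delta, alphas, max_steps):
    """Early stopping: how many steps fit before epsilon exceeds the target."""
    for step in range(1, max_steps + 1):
        if epsilon_spent(noise_multiplier, step, delta, alphas) > target_epsilon:
            return step - 1
    return max_steps


# Candidate RDP orders (all greater than 1); example values only.
alphas = [1.0 + x / 10.0 for x in range(1, 100)] + list(range(12, 64))
budget_steps = steps_within_budget(
    target_epsilon=3.0, noise_multiplier=20.0, delta=1e-5,
    alphas=alphas, max_steps=1000,
)
print("steps that fit in the budget:", budget_steps)
```

Because RDP composes additively across steps, the accountant only needs to keep a running total per order and convert to epsilon on demand, which is what makes real-time monitoring cheap.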

Model validation: Before training a model, Opacus validates that the model is compatible with DP-SGD.

Poisson sampling: Opacus supports uniform sampling of batches (also known as Poisson sampling). That is, each data point is independently added to the batch with a probability equal to the sampling rate, so the batch size varies from step to step.
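A minimal sketch of this sampling scheme, written in plain Python rather than with the Opacus data loader, could look like the following. The function name `poisson_sample` and the dataset size and sampling rate are hypothetical choices for the example.

```python
import random


def poisson_sample(dataset_size, sample_rate, rng):
    """Return the indices of one batch. Each index is included independently
    with probability sample_rate, so the batch size is itself random."""
    return [i for i in range(dataset_size) if rng.random() < sample_rate]


rng = random.Random(42)
batches = [poisson_sample(1000, 0.05, rng) for _ in range(5)]
sizes = [len(b) for b in batches]  # fluctuates around 1000 * 0.05 = 50
print(sizes)
```

This differs from the usual shuffle-and-split loader, where every batch has exactly the same size and each sample appears once per epoch.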

Vectorised computation: Opacus makes efficient use of hardware accelerators like GPUs, TPUs, etc.

Virtual steps: To maximise the usage of all available memory, Opacus provides an option to decouple physical batch size and logical batch size.
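The decoupling can be illustrated with a small accumulation loop. The sketch below is an assumption-laden toy, not the Opacus implementation: it uses a linear model with squared-error loss, and names such as `logical_step` and `physical_size` are invented for the example. Clipped gradients are accumulated over memory-sized chunks, and noise is added only once per logical batch.

```python
import math
import random


def clip(grad, max_norm):
    """Scale grad down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def per_sample_grads(w, samples):
    """Per-sample gradients of squared error for a toy linear model."""
    grads = []
    for x, y in samples:
        err = sum(wj * xj for wj, xj in zip(w, x)) - y
        grads.append([2.0 * err * xj for xj in x])
    return grads


def logical_step(w, logical_batch, physical_size, max_norm, noise_multiplier, lr, rng):
    """One optimiser step over a logical batch, processed in physical chunks."""
    total = [0.0] * len(w)
    for start in range(0, len(logical_batch), physical_size):
        chunk = logical_batch[start:start + physical_size]  # fits in memory
        for g in per_sample_grads(w, chunk):
            g = clip(g, max_norm)
            total = [t + gj for t, gj in zip(total, g)]  # accumulate only
    # Noise is added once per logical batch, not once per physical chunk.
    std = noise_multiplier * max_norm
    noisy = [(t + rng.gauss(0.0, std)) / len(logical_batch) for t in total]
    return [wj - lr * gj for wj, gj in zip(w, noisy)]


data = [([1.0, 2.0], 3.0), ([0.5, -1.0], 0.0), ([2.0, 0.0], 1.0), ([-1.0, 1.0], 2.0)]
w = logical_step([0.0, 0.0], data, physical_size=2, max_norm=1.0,
                 noise_multiplier=1.0, lr=0.1, rng=random.Random(7))
```

With the same random seed, the result does not depend on `physical_size`, which is the point: the physical batch size becomes a memory setting rather than a training hyperparameter.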

Custom layers: Opacus is flexible as it supports various layers, including convolutions, LSTMs, multi-head attention, normalisation, and embedding layers. When using a custom PyTorch layer, users can provide a method to calculate per-sample gradients for that layer and register it with a simple decorator provided by Opacus.

Secure random number generation: Opacus offers a cryptographically secure (but slower) pseudorandom number generator (CSPRNG) for security-critical code.
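The trade-off can be illustrated with Python's standard library. This is only an analogy for the choice Opacus exposes, not its implementation: `random.Random` is a fast, seedable generator whose output is predictable from its state, while `random.SystemRandom` draws from the operating system's entropy source and cannot be seeded. The helper `gaussian_noise` is a hypothetical name.

```python
import random


def gaussian_noise(rng, std, size):
    """Draw `size` Gaussian noise values with the given generator."""
    return [rng.gauss(0.0, std) for _ in range(size)]


fast_rng = random.Random(0)          # reproducible, suitable for experiments
secure_rng = random.SystemRandom()   # OS-backed, slower, not reproducible

debug_noise = gaussian_noise(fast_rng, std=1.0, size=3)
private_noise = gaussian_noise(secure_rng, std=1.0, size=3)
```

A seeded generator is convenient for debugging, but if an attacker can predict the noise, they can subtract it, so noise used for a real privacy guarantee should come from the secure source.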

Other Differential Privacy Learning Libraries

Besides Opacus, other differential privacy learning libraries include PyVacy and TensorFlow Privacy, which provide implementations of DP-SGD for PyTorch and TensorFlow respectively. Another framework, BackPACK, can also be used for DP-SGD and exploits Jacobians for efficiency. It currently supports only fully connected and convolutional layers and several activation layers; recurrent and residual layers are not yet supported.

Wrapping up

As a PyTorch library for training deep learning models with differential privacy, Opacus is designed to provide simplicity, flexibility, and speed. It is currently maintained as an open-source project, supported by the privacy-preserving machine learning team at Facebook. In the future, the team plans to add several extensions and upgrades, including more flexibility for custom components, efficiency improvements, and better integration with the PyTorch community via projects like PyTorch Lightning.