Diffprivlib is a general-purpose library for experimenting with, investigating, and developing applications in differential privacy.

Use diffprivlib if you are looking to:

- Experiment with differential privacy

- Explore the impact of differential privacy on machine learning accuracy using classification and clustering models

- Build your own differential privacy applications, using our extensive collection of mechanisms

Diffprivlib supports Python versions 3.8 to 3.11.

We're using the Iris dataset, so let's load it and perform an 80/20 train/test split.

from sklearn import datasets
from sklearn.model_selection import train_test_split

dataset = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(dataset.data, dataset.target, test_size=0.2)

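Next, train a differentially private naive Bayes classifier. `diffprivlib.models.GaussianNB` is used in the same way as an `sklearn` classifier. It can be run without any parameters, although omitting the `bounds` parameter triggers a warning. The privacy level is controlled by the `epsilon` parameter, which is passed at initialisation (e.g. `GaussianNB(epsilon=0.1)`). The default is `epsilon = 1.0`.

from diffprivlib.models import GaussianNB

clf = GaussianNB()
clf.fit(X_train, y_train)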
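For reference, the `epsilon` parameter corresponds to the ε in the standard definition of differential privacy (stated here from the general literature, not from this README). A randomised algorithm $M$ is ε-differentially private if, for all datasets $D$ and $D'$ that differ in one individual's record, and for all sets of outputs $S$:

```latex
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S]
```

Smaller values of ε give a stronger privacy guarantee, typically at the cost of accuracy.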

We can now classify unseen examples, knowing that the trained model is differentially private and preserves the privacy of the 'individuals' in the training set (flowers are entitled to their privacy too!).

clf.predict(X_test)

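Because differential privacy relies on randomness, every call to `.fit()` produces a different model, so the accuracy changes between runs even when the training data stays the same.

print("Test accuracy: %f" % clf.score(X_test, y_test))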
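As a rough illustration of where run-to-run variation in a differentially private result comes from, the sketch below applies the Laplace mechanism to a bounded mean using only the standard library. It is not diffprivlib's implementation: `laplace_noise`, `private_mean`, and the sample values are hypothetical names and data introduced for this example.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Hypothetical helper: epsilon-DP mean of values clipped to [lower, upper]."""
    clipped = [min(max(v, lower), upper) for v in values]
    # Replacing one record moves the mean by at most (upper - lower) / n.
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)
    # The noise scale shrinks as epsilon grows (weaker privacy, less noise).
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random()  # unseeded on purpose, so every run differs
sepal_lengths = [5.1, 4.9, 6.3, 5.8, 7.1, 6.5, 5.0, 5.4]  # made-up sample

for epsilon in (0.1, 1.0, 10.0):
    draws = [private_mean(sepal_lengths, 4.3, 7.9, epsilon, rng) for _ in range(3)]
    print(epsilon, [round(d, 3) for d in draws])
```

Running this several times prints different values each time, with the spread narrowing as `epsilon` increases.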

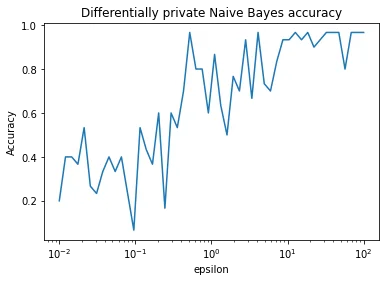

We can evaluate the accuracy of the model for a range of `epsilon` values and plot the results with `matplotlib`.

import numpy as np

import matplotlib.pyplot as plt

epsilons = np.logspace(-2, 2, 50)

bounds = ([4.3, 2.0, 1.1, 0.1], [7.9, 4.4, 6.9, 2.5])

accuracy = list()

for epsilon in epsilons:
    # Train a fresh differentially private model for each privacy level
    clf = GaussianNB(bounds=bounds, epsilon=epsilon)
    clf.fit(X_train, y_train)
    accuracy.append(clf.score(X_test, y_test))

plt.semilogx(epsilons, accuracy)

plt.title("Differentially private Naive Bayes accuracy")

plt.xlabel("epsilon")

plt.ylabel("Accuracy")

plt.show()
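The `bounds` tuple used above holds a lower and an upper limit for each of the four Iris features. As a rough illustration of what such bounds mean, the plain-Python sketch below clips a sample to them. `clip_sample` is a hypothetical helper written for this example and makes no claim about the library's internals.

```python
bounds = ([4.3, 2.0, 1.1, 0.1], [7.9, 4.4, 6.9, 2.5])


def clip_sample(sample, bounds):
    """Clip each feature of one sample to its (lower, upper) bound."""
    lower, upper = bounds
    if not (len(sample) == len(lower) == len(upper)):
        raise ValueError("sample and bounds must have the same number of features")
    return [min(max(x, lo), hi) for x, lo, hi in zip(sample, lower, upper)]


inside = [5.1, 3.5, 1.4, 0.2]    # within every bound, so unchanged
outside = [8.5, 1.5, 1.4, 3.0]   # three features fall outside the bounds

print(clip_sample(inside, bounds))   # [5.1, 3.5, 1.4, 0.2]
print(clip_sample(outside, bounds))  # [7.9, 2.0, 1.4, 2.5]
```

In differential privacy, such bounds are ideally chosen from domain knowledge rather than computed from the private data.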

Congratulations, you've completed your first differentially private machine learning task with the Differential Privacy Library! Check out more examples in the notebooks directory, or dive straight in.

Contents

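Diffprivlib consists of four major components:

- Mechanisms: the building blocks of differential privacy, used by the library's models.

- Models: machine learning models with differential privacy.

- Tools: generic tools for differentially private data analysis.

- Accountant: the `BudgetAccountant` class, which tracks privacy budget.

Setup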

Installation with pip

The library can be installed from the PyPI repository using `pip` (or `pip3`):

pip install diffprivlib

Manual installation

For the most recent version of the library, either download the source code or clone the repository in your directory of choice:

git clone https://github.com/IBM/differential-privacy-library

To install `diffprivlib`, run the following in the project folder (alternatively, you can run `python3 -m pip install .`):

pip install .

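The library comes with unit tests for `pytest`. To check your installation, run `pytest` in the install folder:

pytest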

Citing diffprivlib

If you use diffprivlib for research, please consider citing the following reference paper:

@article{diffprivlib,
  title={Diffprivlib: the {IBM} differential privacy library},
  author={Holohan, Naoise and Braghin, Stefano and Mac Aonghusa, P{\'o}l and Levacher, Killian},
  year={2019},
  journal = {ArXiv e-prints},
  archivePrefix = "arXiv",
  volume = {1907.02444 [cs.CR]},
  primaryClass = "cs.CR",
  month = jul
}

References

- Holohan, N., Antonatos, S., Braghin, S. and Mac Aonghusa, P., 2018. The Bounded Laplace Mechanism in Differential Privacy. arXiv preprint arXiv:1808.10410.

- Holohan, N., Braghin, S., Mac Aonghusa, P. and Levacher, K., 2019. Diffprivlib: the IBM Differential Privacy Library. arXiv preprint arXiv:1907.02444.

- Ludwig, H., Baracaldo, N., Thomas, G., Zhou, Y., Anwar, A., Rajamoni, S., Ong, Y., Radhakrishnan, J., Verma, A., Sinn, M. and Purcell, M., 2020. IBM Federated Learning: an Enterprise Framework White Paper v0.1. arXiv preprint arXiv:2007.10987.

- Holohan, N. and Braghin, S., 2021. Secure Random Sampling in Differential Privacy. In Computer Security–ESORICS 2021: 26th European Symposium on Research in Computer Security, Darmstadt, Germany, October 4–8, 2021, Proceedings, Part II 26 (pp. 523-542). Springer International Publishing.