The Go runtime scheduler: G, P, and M

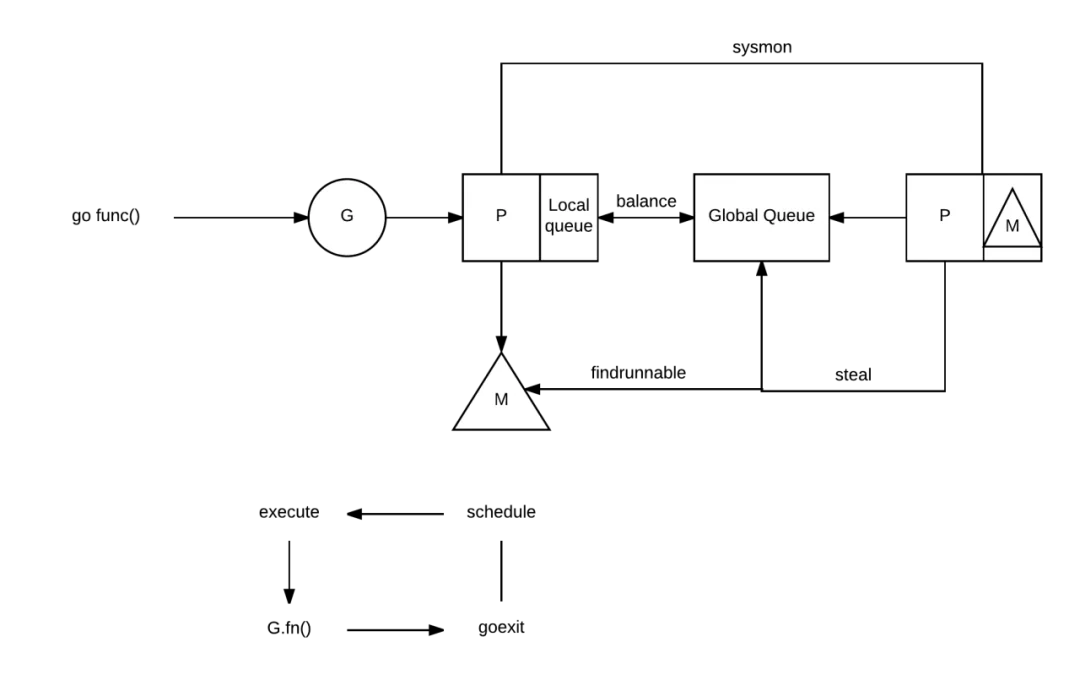

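The scheduler's job is to bring together a G (the code to run), an M (where the code runs), and a P (the permission and resources the code needs in order to run).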

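This section reads the runtime source to see what these three components actually look like, and so deepen our understanding of GPM. The version used is go version go1.15.6.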

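For background, see `src/runtime/HACKING.md` in the `runtime` source tree. The structs discussed below are defined in `src/runtime/runtime2.go`.

goroutine (G)

A goroutine (coroutine) is represented by the `g` struct in `src/runtime/runtime2.go`. `g` has a great many fields, so we go through them in groups.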

type g struct {

// Stack parameters.

// stack describes the actual stack memory: [stack.lo, stack.hi).

// stackguard0 is the stack pointer compared in the Go stack growth prologue.

// It is stack.lo+StackGuard normally, but can be StackPreempt to trigger a preemption.

// stackguard1 is the stack pointer compared in the C stack growth prologue.

// It is stack.lo+StackGuard on g0 and gsignal stacks.

// It is ~0 on other goroutine stacks, to trigger a call to morestackc (and crash).

stack stack // offset known to runtime/cgo

// Threshold used to check whether enough stack space is left; below it the stack is grown. stackguard0 is the one used by Go code.

stackguard0 uintptr // offset known to liblink

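// Threshold used to check whether enough stack space is left; below it the stack is grown. stackguard1 is the one used by native (C) code.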

stackguard1 uintptr // offset known to liblink

}

`stack` describes the goroutine's stack memory, with bounds [stack.lo, stack.hi):

// Stack describes a Go execution stack.

// The bounds of the stack are exactly [lo, hi),

// with no implicit data structures on either side.

// In other words, the range is half-open: lo is included and hi is excluded, i.e. lo <= addr < hi.

type stack struct {

lo uintptr // low bound of this goroutine's stack (inclusive)

hi uintptr // high bound of this goroutine's stack (exclusive)

}

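`stackguard0` and `stackguard1` are the values that the stack-growth prologue compares against. `stackguard0` can also be set to `StackPreempt` to request preemption of the `g`. In a function prologue the SP (stack pointer) is compared with `stackguard0`, which is normally `stack.lo+StackGuard`; when too little stack is left, `runtime·morestack_noctxt()` is called to grow it.

For more on how the stack works, refer to this article.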
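The bounds check and the prologue comparison described above can be sketched in ordinary Go. This is only an illustration: the `stackGuard` constant, the `contains` method, and the `needsGrowth` helper are hypothetical names for this sketch, and the real check is emitted by the compiler as a few assembly instructions.

```go
package main

import "fmt"

// stack mirrors the two-field layout shown above: the valid range is [lo, hi).
type stack struct {
	lo uintptr
	hi uintptr
}

// stackGuard is a hypothetical guard size chosen for this sketch; the
// runtime's real StackGuard depends on the platform and build.
const stackGuard = 928

// contains reports whether addr lies inside the half-open range [lo, hi).
func (s stack) contains(addr uintptr) bool {
	return s.lo <= addr && addr < s.hi
}

// needsGrowth mimics the prologue check. The stack grows downward, so a
// stack pointer at or below the guard means too little room is left.
func needsGrowth(sp, stackguard0 uintptr) bool {
	return sp <= stackguard0
}

func main() {
	s := stack{lo: 0x1000, hi: 0x3000}
	guard := s.lo + stackGuard

	fmt.Println("lo inside:", s.contains(s.lo), "hi inside:", s.contains(s.hi))
	fmt.Println("grow at sp=0x2000:", needsGrowth(0x2000, guard))
	fmt.Println("grow at sp=0x1100:", needsGrowth(0x1100, guard))
}
```

Setting the guard to a very large sentinel makes the comparison fail on the next function call, which is how a value such as `StackPreempt` can double as a preemption request.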

type g struct {

preempt bool // preemption signal, duplicates stackguard0 = stackpreempt

preemptStop bool // transition to _Gpreempted on preemption; otherwise, just deschedule

preemptShrink bool // shrink stack at synchronous safe point

}

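- `preempt`: the preemption signal; duplicates `stackguard0 = stackpreempt`.
- `preemptStop`: on preemption, transition to `_Gpreempted`; otherwise just deschedule.
- `preemptShrink`: shrink the stack at a synchronous safe point.

type g struct {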

_panic *_panic // innermost panic - offset known to liblink

_defer *_defer // innermost defer

}

- `_panic`: the innermost panic.
- `_defer`: the innermost defer.

type g struct {

m *m // current m; offset known to arm liblink

sched gobuf

goid int64

}

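- `m`: the M this g is currently running on.
- `sched`: the saved scheduling context, stored in a `gobuf`.
- `goid`: the goroutine's ID.

The `gobuf` struct: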

type gobuf struct {

// The offsets of sp, pc, and g are known to (hard-coded in) libmach.

// (sp, pc, and g here are saved register values.)

//

// ctxt is unusual with respect to GC: it may be a

// heap-allocated funcval, so GC needs to track it, but it

// needs to be set and cleared from assembly, where it's

// difficult to have write barriers. However, ctxt is really a

// saved, live register, and we only ever exchange it between

// the real register and the gobuf. Hence, we treat it as a

// root during stack scanning, which means assembly that saves

// and restores it doesn't need write barriers. It's still

// typed as a pointer so that any other writes from Go get

// write barriers.

sp uintptr

pc uintptr

g guintptr

ctxt unsafe.Pointer

ret sys.Uintreg

lr uintptr

bp uintptr // for GOEXPERIMENT=framepointer

}

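- `sp`, `pc`: the stack pointer and program counter saved in the `gobuf`.
- `g`: the g that this `gobuf` belongs to.
- `ctxt`: may point to a heap-allocated funcval, so the GC has to track it. It is treated as a root during stack scanning, so the assembly that saves and restores it needs no write barriers.
- `ret`: a saved return value.
- `bp`: the frame pointer, used with GOEXPERIMENT=framepointer.

A goroutine can be in one of the following states (see the source):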

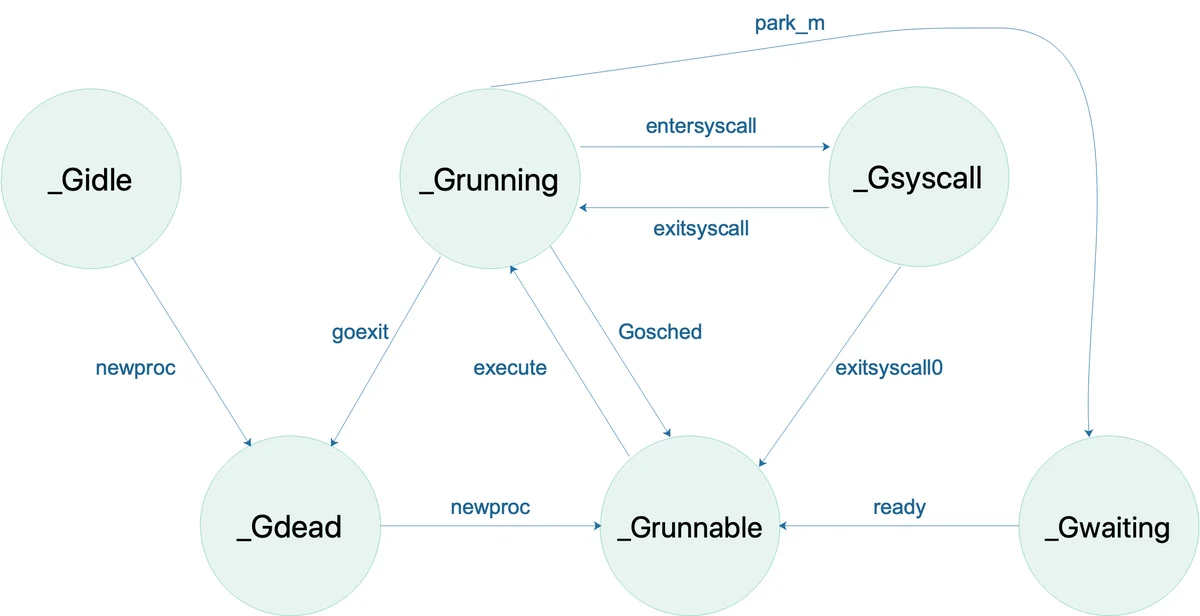

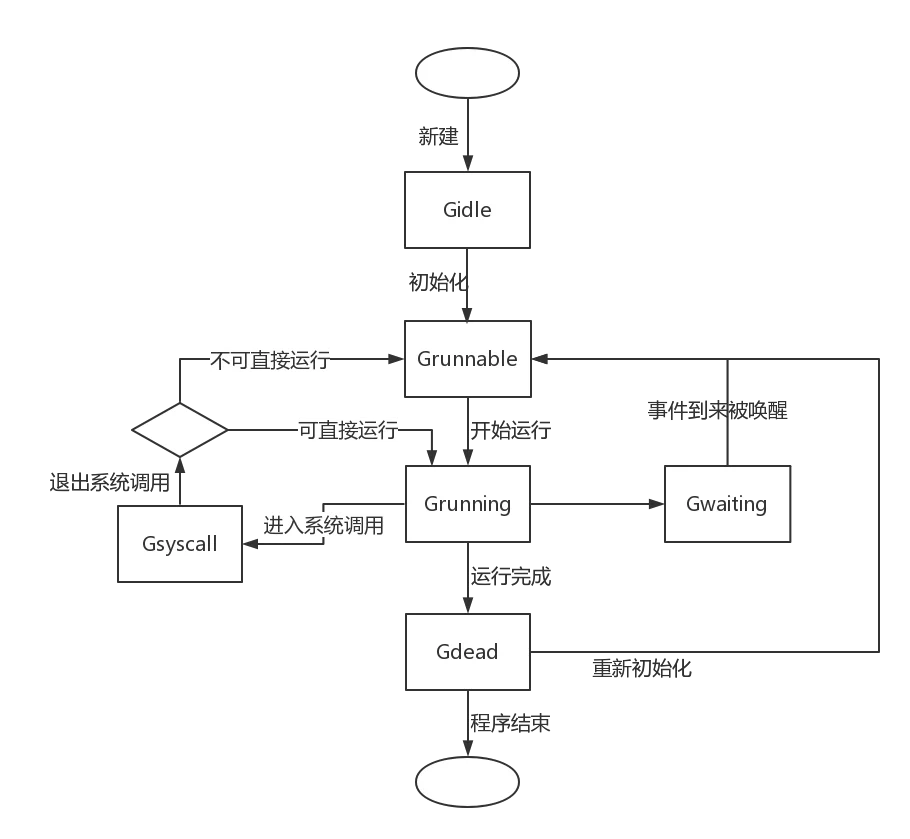

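- `_Gidle`: the goroutine was just allocated and has not been initialized yet.
- `_Grunnable`: on a run queue, not currently executing user code.
- `_Grunning`: may be executing user code; it has an M and a P and is not on a run queue.
- `_Gsyscall`: executing a system call; it has an M.
- `_Gwaiting`: blocked in the runtime; not on a run queue.
- `_Gmoribund_unused`: currently unused, but hard-coded in gdb scripts.
- `_Gdead`: currently unused; the goroutine may have just exited, be on a free list, or be in the middle of initialization.
- `_Genqueue_unused`: currently unused.
- `_Gcopystack`: the goroutine's stack is being moved.
- `_Gpreempted`: the goroutine stopped itself for a preemption.
- `_Gscan`: a bit combined with one of the states above (except `_Grunning`) to indicate that the GC is scanning the stack.
- `_Gscanrunnable`, `_Gscanrunning`, `_Gscansyscall`, `_Gscanwaiting`, `_Gscanpreempted`: the combined scan states.

Apart from the two unused states mentioned above, there are 14 status values in total. Many of the states can transition to one another, as shown in the state diagram.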
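To make the scan bit and the atomic transitions concrete, here is a minimal sketch. The constants are local copies of the values I believe `runtime2.go` uses in go1.15, and `casStatus` and `baseStatus` are hypothetical helpers for this sketch, not runtime functions.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Local copies of a few status values (assumed to match go1.15 runtime2.go).
const (
	gRunnable uint32 = 1      // _Grunnable
	gRunning  uint32 = 2      // _Grunning
	gWaiting  uint32 = 4      // _Gwaiting
	gScan     uint32 = 0x1000 // _Gscan bit
)

// casStatus is a simplified stand-in for a status transition: it succeeds
// only if the current status still equals old.
func casStatus(status *uint32, old, next uint32) bool {
	return atomic.CompareAndSwapUint32(status, old, next)
}

// baseStatus strips the scan bit and returns the underlying state.
func baseStatus(s uint32) uint32 {
	return s &^ gScan
}

func main() {
	status := gRunnable

	// Runnable -> running succeeds because the status matches.
	fmt.Println(casStatus(&status, gRunnable, gRunning))
	// A second attempt from runnable fails: the goroutine is now running.
	fmt.Println(casStatus(&status, gRunnable, gWaiting))

	// The scan states are the scan bit plus the base state.
	scanWaiting := gScan | gWaiting
	fmt.Printf("%#x base=%d\n", scanWaiting, baseStatus(scanWaiting))
}
```

Because every transition is a compare-and-swap on a single word, a goroutine whose status carries the scan bit cannot be moved to another state by a racing thread until the scanner clears the bit.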

type g struct {

syscallsp uintptr // if status==Gsyscall, syscallsp = sched.sp to use during gc

syscallpc uintptr // if status==Gsyscall, syscallpc = sched.pc to use during gc

stktopsp uintptr // expected sp at top of stack, to check in traceback

param unsafe.Pointer // passed parameter on wakeup

atomicstatus uint32

stackLock uint32 // sigprof/scang lock; TODO: fold in to atomicstatus

}

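- `atomicstatus`: the goroutine's status, accessed atomically.
- `syscallsp`: if the status is `Gsyscall`, this equals `sched.sp` and is used during GC.
- `syscallpc`: if the status is `Gsyscall`, this equals `sched.pc` and is used during GC.
- `stktopsp`: the expected sp at the top of the stack, checked in traceback.
- `param`: a parameter passed on wakeup, for example by `ready()`.
- `stackLock`: the sigprof/scang lock.

type g struct {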

waitsince int64 // approx time when the g become blocked

waitreason waitReason // if status==Gwaiting

}

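- `waitsince`: the approximate time at which the g became blocked.
- `waitreason`: the reason for waiting, when the status is `Gwaiting`.

type g struct {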

// asyncSafePoint is set if g is stopped at an asynchronous

// safe point. This means there are frames on the stack

// without precise pointer information.

asyncSafePoint bool

paniconfault bool // panic (instead of crash) on unexpected fault address

gcscandone bool // g has scanned stack; protected by _Gscan bit in status

throwsplit bool // must not split stack

}

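- `asyncSafePoint`: `true` if the g is stopped at an asynchronous safe point.
- `paniconfault`: panic (instead of crashing) on an unexpected fault address.
- `gcscandone`: the g's stack has been scanned; protected by the `_Gscan` bit in the status.
- `throwsplit`: the stack must not be split.

type g struct {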

// activeStackChans indicates that there are unlocked channels

// pointing into this goroutine's stack. If true, stack

// copying needs to acquire channel locks to protect these

// areas of the stack.

activeStackChans bool

// parkingOnChan indicates that the goroutine is about to

// park on a chansend or chanrecv. Used to signal an unsafe point

// for stack shrinking. It's a boolean value, but is updated atomically.

parkingOnChan uint8

}

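- `activeStackChans`: there are unlocked channels pointing into this goroutine's stack.
- `parkingOnChan`: the goroutine is about to park on a channel send or receive.

type g struct {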

raceignore int8 // ignore race detection events

sysblocktraced bool // StartTrace has emitted EvGoInSyscall about this goroutine

sysexitticks int64 // cputicks when syscall has returned (for tracing)

traceseq uint64 // trace event sequencer

tracelastp puintptr // last P emitted an event for this goroutine

lockedm muintptr

sig uint32

writebuf []byte

sigcode0 uintptr

sigcode1 uintptr

sigpc uintptr

gopc uintptr // pc of go statement that created this goroutine

ancestors *[]ancestorInfo // ancestor information goroutine(s) that created this goroutine (only used if debug.tracebackancestors)

startpc uintptr // pc of goroutine function

racectx uintptr

waiting *sudog // sudog structures this g is waiting on (that have a valid elem ptr); in lock order

cgoCtxt []uintptr // cgo traceback context

labels unsafe.Pointer // profiler labels

timer *timer // cached timer for time.Sleep

selectDone uint32 // are we participating in a select and did someone win the race?

}

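- `gopc`: the pc of the `go` statement that created this goroutine.
- `startpc`: the pc of the goroutine's function.
- `waiting`: the sudog structures this g is waiting on.
- `timer`: a cached timer for `time.Sleep`.

Judging by their names, many of the remaining fields are related to tracing. I am not sure what they all mean.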

type g struct {

// Per-G GC state

// gcAssistBytes is this G's GC assist credit in terms of

// bytes allocated. If this is positive, then the G has credit

// to allocate gcAssistBytes bytes without assisting. If this

// is negative, then the G must correct this by performing

// scan work. We track this in bytes to make it fast to update

// and check for debt in the malloc hot path. The assist ratio

// determines how this corresponds to scan work debt.

gcAssistBytes int64

}
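The credit/debt bookkeeping that the `gcAssistBytes` comment describes can be sketched as follows. This is a simplified model under my own assumptions: the `charge` method is a hypothetical name, and the real runtime only performs this accounting on the allocation path while a GC cycle is marking.

```go
package main

import "fmt"

// g keeps only the field relevant to this sketch.
type g struct {
	gcAssistBytes int64 // positive: credit, negative: debt
}

// charge subtracts an allocation of size bytes from the credit and reports
// whether the goroutine is now in debt and would have to do scan work.
func (gp *g) charge(size int64) (mustAssist bool) {
	gp.gcAssistBytes -= size
	return gp.gcAssistBytes < 0
}

func main() {
	gp := &g{gcAssistBytes: 100}

	fmt.Println(gp.charge(60), gp.gcAssistBytes) // credit covers the allocation
	fmt.Println(gp.charge(60), gp.gcAssistBytes) // credit exhausted, debt remains
}
```

Tracking the balance in bytes keeps the hot path to one subtraction and one sign check, which matches the reasoning given in the source comment.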

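- `gcAssistBytes`: this G's GC assist credit, in bytes. A negative `gcAssistBytes` means the G must do scan work; the value is checked on the allocation path in `runtime.mallocgc`.

Summary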

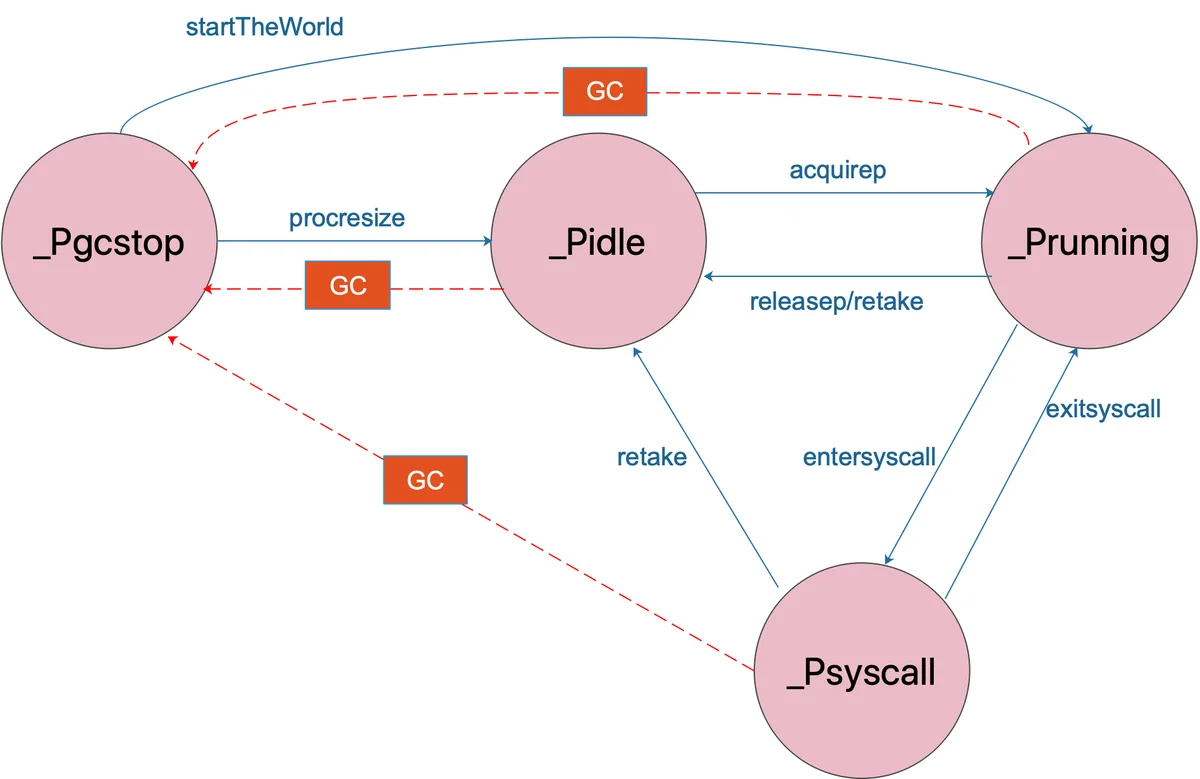

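- The goroutine's state is stored in `atomicstatus` and is updated atomically.
- `sched`, a `gobuf`, saves the execution context.
- `preempt`, `preemptStop`, and `preemptShrink` control preemption.
- `goid` is the goroutine's ID.
- `_defer` and `_panic` point to the innermost defer and panic.
- `waitsince` and `waitreason` record when and why the goroutine blocked.
- `gcscandone` records whether the stack has been scanned, and is protected by the `_Gscan` bit in `atomicstatus`.

P

The number of Ps determines the maximum number of Gs that can run in parallel (provided the number of physical CPU cores >= the number of Ps).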

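The number of Ps is determined by the GOMAXPROCS value the user sets. Early Go releases capped the number of Ps (256 is the figure usually quoted), but according to the Go 1.10 release notes there is no longer a limit on GOMAXPROCS, so no such cap applies to go1.15.6.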

The P data structure also has dozens of fields, so again we look at them in groups.

type p struct {

id int32

status uint32 // one of pidle/prunning/...

link puintptr

schedtick uint32 // incremented on every scheduler call

syscalltick uint32 // incremented on every system call

sysmontick sysmontick // last tick observed by sysmon

}

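- `id`: the P's ID.
- `status`: the P's state.
- `link`: a link to another P, used when Ps are kept in a list.
- `schedtick`: incremented on every scheduler call.
- `syscalltick`: incremented on every system call.
- `sysmontick`: the last tick observed by sysmon.

A P can be in one of five states: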

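- `_Pidle`: the P is not being used to run user code or the scheduler.
- `_Prunning`: the P is owned by an M and is running user code or the scheduler. From here it can move to `_Pidle`, `_Psyscall`, or `_Pgcstop`.
- `_Psyscall`: the P is not running user code; its M is in a system call.
- `_Pgcstop`: the P is halted for the GC.
- `_Pdead`: the P is no longer used.

type p struct {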

m muintptr // back-link to associated m (nil if idle)

mcache *mcache

pcache pageCache

raceprocctx uintptr

}

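- `m`: back-link to the associated M (nil if the P is idle, i.e. `_Pidle`).
- `mcache`: the per-P memory cache.
- `pcache`: the per-P page cache.
- `raceprocctx`: context used by the race detector.

`mcache` exists so that when Go code running on a P allocates small objects (under 32 KB), the memory comes straight from `P.mcache` instead of going through the shared heap or the OS each time. Because every P has its own cache, multiple Ps can allocate concurrently with much less lock contention. See the linked reference.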

type p struct {

deferpool [5][]*_defer // pool of available defer structs of different sizes (see panic.go)

deferpoolbuf [5][32]*_defer

}

`deferpool` and `deferpoolbuf` are both related to defer: they form a per-P pool of available `_defer` structs.

type p struct {

// Cache of goroutine ids, amortizes accesses to runtime·sched.goidgen.

goidcache uint64

goidcacheend uint64

}

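`goidcache` and `goidcacheend` together form a cache of goroutine IDs, so that a P does not have to touch the global `goidgen` for every new goroutine.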
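The batching idea can be sketched like this. The batch size of 16 and the refill arithmetic follow my reading of go1.15's `proc.go` and should be treated as assumptions; `nextGoid` and the trimmed-down `sched` and `p` types are hypothetical names for this sketch.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// goidCacheBatch is the number of IDs a P reserves at once (assumed value).
const goidCacheBatch = 16

// sched stands in for the global scheduler state.
type sched struct {
	goidgen uint64 // global ID generator, updated atomically
}

// p keeps only the ID cache: IDs in [goidcache, goidcacheend) are free.
type p struct {
	goidcache    uint64
	goidcacheend uint64
}

// nextGoid hands out one ID, refilling the cache from the global
// generator only when the local batch is exhausted.
func (pp *p) nextGoid(s *sched) uint64 {
	if pp.goidcache == pp.goidcacheend {
		top := atomic.AddUint64(&s.goidgen, goidCacheBatch)
		pp.goidcache = top - goidCacheBatch + 1
		pp.goidcacheend = pp.goidcache + goidCacheBatch
	}
	id := pp.goidcache
	pp.goidcache++
	return id
}

func main() {
	var s sched
	var p1, p2 p

	fmt.Println(p1.nextGoid(&s)) // first ID of p1's batch
	fmt.Println(p2.nextGoid(&s)) // p2 reserves the next batch
	fmt.Println(p1.nextGoid(&s)) // served from p1's cache, no atomic needed
}
```

One atomic add per batch instead of one per goroutine is what the source comment means by amortizing accesses to `runtime·sched.goidgen`. A side effect is that IDs are not globally ordered by creation time across Ps.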

type p struct {

// Queue of runnable goroutines. Accessed without lock.

runqhead uint32

runqtail uint32

runq [256]guintptr

// runnext, if non-nil, is a runnable G that was ready'd by

// the current G and should be run next instead of what's in

// runq if there's time remaining in the running G's time

// slice. It will inherit the time left in the current time

// slice. If a set of goroutines is locked in a

// communicate-and-wait pattern, this schedules that set as a

// unit and eliminates the (potentially large) scheduling

// latency that otherwise arises from adding the ready'd

// goroutines to the end of the run queue.

runnext guintptr

}
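The local run queue fields above form a fixed-size ring buffer with a one-slot fast path. The following is a single-threaded sketch of that idea only: the real runtime uses atomic operations on `runqhead` and `runqtail`, and when the queue is full it moves part of it to the global queue rather than failing. The `localRunq` type and the use of plain ints in place of `guintptr` are assumptions made for illustration.

```go
package main

import "fmt"

const runqSize = 256 // capacity of the per-P queue, as in the struct above

// localRunq is a simplified per-P run queue. Goroutines are represented
// by non-zero ints; 0 in next means "no runnext".
type localRunq struct {
	head uint32
	tail uint32
	buf  [runqSize]int
	next int // stand-in for runnext
}

// put appends g at the tail and reports false when the ring is full.
func (q *localRunq) put(g int) bool {
	if q.tail-q.head >= runqSize {
		return false
	}
	q.buf[q.tail%runqSize] = g
	q.tail++
	return true
}

// get prefers next, then takes from the head in FIFO order.
func (q *localRunq) get() (int, bool) {
	if q.next != 0 {
		g := q.next
		q.next = 0
		return g, true
	}
	if q.head == q.tail {
		return 0, false
	}
	g := q.buf[q.head%runqSize]
	q.head++
	return g, true
}

func main() {
	var q localRunq
	q.put(1)
	q.put(2)
	q.next = 99 // a G readied by the current G jumps the queue

	for {
		g, ok := q.get()
		if !ok {
			break
		}
		fmt.Println(g)
	}
}
```

Because `head` and `tail` only ever increase and are reduced modulo the size on access, `tail - head` is always the queue length, even after the counters wrap around.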

- `runqhead`, `runqtail`: the head and tail of the local run queue.
- `runq`: the local queue of runnable goroutines, accessed without a lock. It holds at most 256 entries.
- `runnext`: if non-nil, a runnable G that was readied by the current G and should run next, ahead of anything in `runq`. If it is nil, the next G is taken from `runq`. As the source comment explains, this lets a set of goroutines locked in a communicate-and-wait pattern be scheduled as a unit.

type p struct {

// Available G's (status == Gdead)

gFree struct {

gList

n int32

}

}

- `gFree`: the list of free Gs (status `Gdead`) cached on this P; `gFree.n` is the number of entries.

type p struct {

sudogcache []*sudog

sudogbuf [128]*sudog

}

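`sudogcache` and `sudogbuf` hold `*sudog` values, so they are related to goroutines: a sudog represents a g in a wait list, such as when sending or receiving on a channel. I am not sure how these two fields relate to the `runq` above.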

type p struct {

// Cache of mspan objects from the heap.

mspancache struct {

// We need an explicit length here because this field is used

// in allocation codepaths where write barriers are not allowed,

// and eliminating the write barrier/keeping it eliminated from

// slice updates is tricky, moreso than just managing the length

// ourselves.

len int

buf [128]*mspan

}

}

- `mspancache`: a cache of `mspan` objects from the heap.

type p struct {

tracebuf traceBufPtr

// traceSweep indicates the sweep events should be traced.

// This is used to defer the sweep start event until a span

// has actually been swept.

traceSweep bool

// traceSwept and traceReclaimed track the number of bytes

// swept and reclaimed by sweeping in the current sweep loop.

traceSwept, traceReclaimed uintptr

}

These fields are related to tracing.

type p struct {

palloc persistentAlloc // per-P to avoid mutex

_ uint32 // Alignment for atomic fields below

// The when field of the first entry on the timer heap.

// This is updated using atomic functions.

// This is 0 if the timer heap is empty.

timer0When uint64

}

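- `palloc`: a per-P persistent allocator, which avoids a mutex.
- `_`: padding that aligns the atomic fields below it.
- `timer0When`: the `when` field of the first entry on the timer heap, or 0 if the heap is empty.

type p struct {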

// Per-P GC state

gcAssistTime int64 // Nanoseconds in assistAlloc

gcFractionalMarkTime int64 // Nanoseconds in fractional mark worker (atomic)

gcBgMarkWorker guintptr // (atomic)

gcMarkWorkerMode gcMarkWorkerMode

// gcMarkWorkerStartTime is the nanotime() at which this mark

// worker started.

gcMarkWorkerStartTime int64

// gcw is this P's GC work buffer cache. The work buffer is

// filled by write barriers, drained by mutator assists, and

// disposed on certain GC state transitions.

gcw gcWork

// wbBuf is this P's GC write barrier buffer.

//

// TODO: Consider caching this in the running G.

wbBuf wbBuf

runSafePointFn uint32 // if 1, run sched.safePointFn at next safe point

}

These fields hold the P's GC state.

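- `gcMarkWorkerStartTime`: the time at which this mark worker started.
- `gcw`: this P's GC work buffer cache.
- `wbBuf`: this P's GC write barrier buffer.
- `runSafePointFn`: if 1, run `sched.safePointFn` at the next safe point.

State transition diagram

type p struct {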

// Lock for timers. We normally access the timers while running

// on this P, but the scheduler can also do it from a different P.

timersLock mutex

// Actions to take at some time. This is used to implement the

// standard library's time package.

// Must hold timersLock to access.

timers []*timer

// Number of timers in P's heap.

// Modified using atomic instructions.

numTimers uint32

// Number of timerModifiedEarlier timers on P's heap.

// This should only be modified while holding timersLock,

// or while the timer status is in a transient state

// such as timerModifying.

adjustTimers uint32

// Number of timerDeleted timers in P's heap.

// Modified using atomic instructions.

deletedTimers uint32

// Race context used while executing timer functions.

timerRaceCtx uintptr

}

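- `timersLock`: the lock for timers.
- `timers`: the actions to take at some time; `timersLock` must be held to access it.
- `numTimers`: the number of timers in the P's heap.
- `adjustTimers`: the number of `timerModifiedEarlier` timers on the P's heap.
- `deletedTimers`: the number of `timerDeleted` timers in the P's heap.
- `timerRaceCtx`: the race context used while executing timer functions.

type p struct {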

// preempt is set to indicate that this P should be enter the

// scheduler ASAP (regardless of what G is running on it).

preempt bool

pad cpu.CacheLinePad

}

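- `preempt`: set to indicate that this P should enter the scheduler as soon as possible.
- `pad`: cache-line padding.

Summary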

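- A P is in one of five states: `_Pidle`, `_Prunning`, `_Psyscall`, `_Pgcstop`, or `_Pdead`.
- The number of scheduler calls and system calls is recorded in `schedtick` and `syscalltick`.
- `m` is nil when the P has no associated M.
- `runq` holds the runnable Gs, and `runnext` holds the G to run next.
- `goidcache` caches goroutine IDs.
- A P also carries its own `timers`, an `mspan` cache, and a `preempt` flag.

M

An M is an OS kernel thread and represents the resource that actually performs computation. Once bound to a valid P it enters the schedule loop, which roughly speaking obtains a G from the global queue, the P's local queue, or the wait queues.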

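The number of `m`s is limited to 10000 by default.

Remember: an M does not keep G state, which is what makes it possible to schedule a G across different Ms.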
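The thread limit is exposed to user code through `debug.SetMaxThreads` in `runtime/debug`, which sets a new limit and returns the previous one. The sketch below reads the limit by setting it and immediately restoring it; `maxThreads` is a helper name introduced here.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// maxThreads reads the current OS thread limit. SetMaxThreads has no
// read-only form, so the sketch sets a value and then restores the
// previous one.
func maxThreads() int {
	prev := debug.SetMaxThreads(10000)
	debug.SetMaxThreads(prev)
	return prev
}

func main() {
	fmt.Println("max OS threads:", maxThreads())
}
```

A program that exceeds the limit crashes, so the setting is mainly a safety net against runaway thread creation rather than a tuning knob.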

The `m` struct is described below.

type m struct {

g0 *g // goroutine with scheduling stack

morebuf gobuf // gobuf arg to morestack

divmod uint32 // div/mod denominator for arm - known to liblink

}

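- `g0`: the goroutine that owns the scheduling stack.
- `morebuf`: the `gobuf` argument to `morestack`.
- `divmod`: the div/mod denominator for arm.

type m struct {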

// Fields not known to debuggers.

procid uint64 // for debuggers, but offset not hard-coded

gsignal *g // signal-handling g

goSigStack gsignalStack // Go-allocated signal handling stack

sigmask sigset // storage for saved signal mask

tls [6]uintptr // thread-local storage (for x86 extern register)

mstartfn func()

curg *g // current running goroutine

caughtsig guintptr // goroutine running during fatal signal

p puintptr // attached p for executing go code (nil if not executing go code)

nextp puintptr

oldp puintptr // the p that was attached before executing a syscall

id int64

}

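- `procid`: the thread ID, for debuggers.
- `gsignal`: the g that handles signals.
- `goSigStack`: the Go-allocated signal handling stack.
- `sigmask`: storage for the saved signal mask, of type `sigset`.
- `tls`: thread-local storage.
- `mstartfn`: the function run when the M starts.
- `curg`: the goroutine currently running on this M.
- `caughtsig`: the goroutine that was running during a fatal signal.
- `p`: the P attached for executing Go code (nil if not executing Go code).
- `nextp`: a P handed to this M to use when it starts or wakes up.
- `oldp`: the P that was attached before executing a system call.
- `id`: the M's ID.

type m struct {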

mallocing int32

throwing int32

preemptoff string // if != "", keep curg running on this m

locks int32

dying int32

profilehz int32

}

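- `throwing`: set while the runtime is in `throw`.
- `preemptoff`: if not empty, keep `curg` running on this M.

The purpose of the other fields is unknown to me.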

type m struct {

spinning bool // m is out of work and is actively looking for work

blocked bool // m is blocked on a note

newSigstack bool // minit on C thread called sigaltstack

printlock int8

incgo bool // m is executing a cgo call

freeWait uint32 // if == 0, safe to free g0 and delete m (atomic)

fastrand [2]uint32

needextram bool

traceback uint8

}

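- `spinning`: the M is out of work and is actively looking for work.
- `blocked`: the M is blocked on a `note`.
- `newSigstack`: minit on a C thread called sigaltstack.
- `incgo`: the M is executing a cgo call.
- `freeWait`: if 0, it is safe to free `g0` and delete the M.
- `fastrand`: state of the per-M fast random number generator.
- `printlock`, `needextram`, `traceback`: other per-M flags.

The `note` data structure is: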

// sleep and wakeup on one-time events.

// before any calls to notesleep or notewakeup,

// must call noteclear to initialize the Note.

// then, exactly one thread can call notesleep

// and exactly one thread can call notewakeup (once).

// once notewakeup has been called, the notesleep

// will return. future notesleep will return immediately.

// subsequent noteclear must be called only after

// previous notesleep has returned, e.g. it's disallowed

// to call noteclear straight after notewakeup.

// In short: call noteclear first to initialize the note. After that exactly one thread may call notesleep and exactly one thread may call notewakeup (once).

// Once notewakeup has been called the sleeper returns, and any later notesleep returns immediately.

// noteclear may be called again only after the previous notesleep has returned; calling it straight after notewakeup is not allowed.

//

// notetsleep is like notesleep but wakes up after

// a given number of nanoseconds even if the event

// has not yet happened. if a goroutine uses notetsleep to

// wake up early, it must wait to call noteclear until it

// can be sure that no other goroutine is calling

// notewakeup.

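// In other words, notetsleep is notesleep with a timeout: it wakes up after the given number of nanoseconds even if the event has not happened.

// A goroutine that wakes up early this way must not call noteclear until it is sure that no other goroutine is calling notewakeup.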

//

// notesleep/notetsleep are generally called on g0,

// notetsleepg is similar to notetsleep but is called on user g.

// That is, notesleep/notetsleep normally run on g0, while notetsleepg is the variant called on a user g (presumably an ordinary user goroutine).

type note struct {

// Futex-based impl treats it as uint32 key,

// while sema-based impl as M* waitm.

// Used to be a union, but unions break precise GC.

key uintptr

}

A `note` is operated on with `notesleep`, `notewakeup`, and `noteclear`.

type m struct {

ncgocall uint64 // number of cgo calls in total

ncgo int32 // number of cgo calls currently in progress

cgoCallersUse uint32 // if non-zero, cgoCallers in use temporarily

cgoCallers *cgoCallers // cgo traceback if crashing in cgo call

}

The four fields `ncgocall`, `ncgo`, `cgoCallersUse`, and `cgoCallers` are mainly related to cgo.

type m struct {

park note

alllink *m // on allm

schedlink muintptr

lockedg guintptr

createstack [32]uintptr // stack that created this thread.

lockedExt uint32 // tracking for external LockOSThread

lockedInt uint32 // tracking for internal lockOSThread

nextwaitm muintptr // next m waiting for lock

}

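- `park`: a `note` used to park and wake the M.
- `alllink`: the link on the `allm` list.
- `schedlink`: the link used when the M is kept on a scheduler list.
- `lockedg`: the G locked to this M; the counterpart of `g.lockedm`.
- `createstack`: the stack that created this thread.
- `lockedExt`: tracking for external LockOSThread.
- `lockedInt`: tracking for internal lockOSThread.
- `nextwaitm`: the next M waiting for a lock.

type m struct {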

waitunlockf func(*g, unsafe.Pointer) bool

waitlock unsafe.Pointer

waittraceev byte

waittraceskip int

startingtrace bool

syscalltick uint32

freelink *m // on sched.freem

}

- `waitunlockf`, `waitlock`, `waittraceev`, `waittraceskip`: parameters stored on the M by `gopark()`.
- `startingtrace`: related to starting a trace.
- `syscalltick`: the system call tick.
- `freelink`: the link on `sched.freem`.

type m struct {

// these are here because they are too large to be on the stack

// of low-level NOSPLIT functions.

libcall libcall

libcallpc uintptr // for cpu profiler

libcallsp uintptr

libcallg guintptr

syscall libcall // stores syscall parameters on windows

vdsoSP uintptr // SP for traceback while in VDSO call (0 if not in call)

vdsoPC uintptr // PC for traceback while in VDSO call

}

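The first five fields are probably related to library calls. They live here because they are too large to be placed on the stack of low-level NOSPLIT functions.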

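- `vdsoSP`: the SP for traceback while in a VDSO call (0 if not in a call).
- `vdsoPC`: the PC for traceback while in a VDSO call.

type m struct {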

// preemptGen counts the number of completed preemption

// signals. This is used to detect when a preemption is

// requested, but fails. Accessed atomically.

preemptGen uint32

// Whether this is a pending preemption signal on this M.

// Accessed atomically.

signalPending uint32

dlogPerM

mOS

// Up to 10 locks held by this m, maintained by the lock ranking code.

locksHeldLen int

locksHeld [10]heldLockInfo

}

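- `preemptGen`: counts the number of completed preemption signals.
- `signalPending`: whether there is a pending preemption signal on this M.
- `dlogPerM`, `mOS`: embedded structs; `mOS` holds the OS-specific fields.
- `locksHeldLen`, `locksHeld`: up to 10 locks held by this M, maintained by the lock ranking code. Each entry is a `heldLockInfo`.

// heldLockInfo gives info on a held lock and the rank of that lock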

type heldLockInfo struct {

lockAddr uintptr

rank lockRank

}

// src/runtime/lockrank.go

type lockRank int

Summary

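- `curg` is the goroutine currently running on the M, or nil if there is none.
- `p`, `nextp`, and `oldp` track the P the M is attached to, will attach to, and was attached to before a system call.
- An M sleeps and is woken through a `note`.

sched

`sched` is the Go scheduler. It maintains the queues that hold Ms and Gs, along with some scheduler state, and is used for global scheduling.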

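The scheduler loop roughly works like this: obtain a G from one of the queues or from the P's local queue, switch to the G's stack and run the G's function, call `goexit` to clean up and return to the M, and repeat.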

Let us look at its data structure.

type schedt struct {

// accessed atomically. keep at top to ensure alignment on 32-bit systems.

goidgen uint64

lastpoll uint64 // time of last network poll, 0 if currently polling

pollUntil uint64 // time to which current poll is sleeping

lock mutex

// When increasing nmidle, nmidlelocked, nmsys, or nmfreed, be

// sure to call checkdead().

midle muintptr // idle m's waiting for work

nmidle int32 // number of idle m's waiting for work

nmidlelocked int32 // number of locked m's waiting for work

// mnext serves two purposes: IDs are handed out sequentially, so the count of created Ms is also the next M ID.

mnext int64 // number of m's that have been created and next M ID

maxmcount int32 // maximum number of m's allowed (or die)

nmsys int32 // number of system m's not counted for deadlock

nmfreed int64 // cumulative number of freed m's

ngsys uint32 // number of system goroutines; updated atomically

pidle puintptr // idle p's

npidle uint32

nmspinning uint32 // See "Worker thread parking/unparking" comment in proc.go.

// Global runnable queue.

// gQueue is a dequeue of Gs linked through g.schedlink.

runq gQueue

runqsize int32 // number of Gs in runq

// disable controls selective disabling of the scheduler.

//

// Use schedEnableUser to control this.

//

// disable is protected by sched.lock.

disable struct {

// user disables scheduling of user goroutines.

user bool

runnable gQueue // pending runnable Gs

n int32 // length of runnable

}

// Global cache of dead G's.

gFree struct {

lock mutex

stack gList // Gs with stacks

noStack gList // Gs without stacks

n int32

}

// Central cache of sudog structs.

sudoglock mutex

sudogcache *sudog

// Central pool of available defer structs of different sizes.

deferlock mutex

deferpool [5]*_defer

// freem is the list of m's waiting to be freed when their

// m.exited is set. Linked through m.freelink.

freem *m

gcwaiting uint32 // gc is waiting to run

stopwait int32

stopnote note

sysmonwait uint32

sysmonnote note

// safepointFn should be called on each P at the next GC

// safepoint if p.runSafePointFn is set.

safePointFn func(*p)

safePointWait int32

safePointNote note

profilehz int32 // cpu profiling rate

procresizetime int64 // nanotime() of last change to gomaxprocs

totaltime int64 // ∫gomaxprocs dt up to procresizetime

// sysmonlock protects sysmon's actions on the runtime.

//

// Acquire and hold this mutex to block sysmon from interacting

// with the rest of the runtime.

sysmonlock mutex

}

type schedt struct {

// accessed atomically. keep at top to ensure alignment on 32-bit systems.

goidgen uint64

lastpoll uint64 // time of last network poll, 0 if currently polling

pollUntil uint64 // time to which current poll is sleeping

lock mutex

}

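- `goidgen`: the global goroutine ID generator.
- `lastpoll`: the time of the last network poll, 0 if currently polling.
- `pollUntil`: the time until which the current poll is sleeping.
- `lock`: the scheduler's global lock.

type schedt struct {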

// When increasing nmidle, nmidlelocked, nmsys, or nmfreed, be

// sure to call checkdead().

midle muintptr // idle m's waiting for work

nmidle int32 // number of idle m's waiting for work

nmidlelocked int32 // number of locked m's waiting for work

// mnext serves two purposes: IDs are handed out sequentially, so the count of created Ms is also the next M ID.

mnext int64 // number of m's that have been created and next M ID

maxmcount int32 // maximum number of m's allowed (or die)

nmsys int32 // number of system m's not counted for deadlock

nmfreed int64 // cumulative number of freed m's

ngsys uint32 // number of system goroutines; updated atomically

}

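- `midle`: the list of idle Ms waiting for work.
- `nmidle`: the number of idle Ms waiting for work.
- `nmidlelocked`: the number of locked Ms waiting for work.
- `mnext`: the number of Ms created so far, which is also the next M ID.
- `maxmcount`: the maximum number of Ms allowed.
- `nmsys`: the number of system Ms, which are not counted for deadlock detection.
- `nmfreed`: the cumulative number of freed Ms.
- `ngsys`: the number of system goroutines.

type schedt struct {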

pidle puintptr // idle p's

npidle uint32

nmspinning uint32 // See "Worker thread parking/unparking" comment in proc.go.

// Global runnable queue.

// gQueue is a dequeue of Gs linked through g.schedlink.

runq gQueue

runqsize int32 // number of Gs in runq

}

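- `pidle`: the list of idle Ps.
- `npidle`: the number of idle Ps.
- `nmspinning`: the number of spinning Ms.
- `runq`: the global queue of runnable Gs.
- `runqsize`: the size of the global queue.

type schedt struct {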

// disable controls selective disabling of the scheduler.

//

// Use schedEnableUser to control this.

//

// disable is protected by sched.lock.

disable struct {

// user disables scheduling of user goroutines.

user bool

runnable gQueue // pending runnable Gs

n int32 // length of runnable

}

// Global cache of dead G's.

gFree struct {

lock mutex

stack gList // Gs with stacks

noStack gList // Gs without stacks

n int32

}

}
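The relationship between the per-P free list and the global `gFree` can be sketched as below. The thresholds (spill at 64, drain until fewer than 32 remain) follow my reading of `gfput` in go1.15's `proc.go` and are assumptions; the `pFree` and `schedFree` types are simplified stand-ins that store ints instead of `*g`, and the split between Gs with and without stacks is left out.

```go
package main

import (
	"fmt"
	"sync"
)

// pFree stands in for p.gFree: a lock-free, per-P list of dead Gs.
type pFree struct {
	gs []int
}

// schedFree stands in for sched.gFree: the global list, guarded by a lock.
type schedFree struct {
	lock sync.Mutex
	gs   []int
}

// gfput caches a dead G on the local list. When the local list reaches
// 64 entries, entries are moved to the global list until fewer than 32
// remain locally.
func gfput(p *pFree, s *schedFree, g int) {
	p.gs = append(p.gs, g)
	if len(p.gs) < 64 {
		return
	}
	s.lock.Lock()
	defer s.lock.Unlock()
	for len(p.gs) >= 32 {
		last := p.gs[len(p.gs)-1]
		p.gs = p.gs[:len(p.gs)-1]
		s.gs = append(s.gs, last)
	}
}

func main() {
	var p pFree
	var s schedFree
	for g := 1; g <= 64; g++ {
		gfput(&p, &s, g)
	}
	fmt.Println("local:", len(p.gs), "global:", len(s.gs))
}
```

The local list is touched without any lock on the common path, and the global lock is taken only once per spill, so most goroutine exits never contend with other Ps.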

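- `disable`: controls selective disabling of the scheduler. It is protected by `sched.lock`.
- `gFree`: the global cache of dead Gs. Each `p` also has its own `p.gFree`; when `_p_.gFree.n >= 64`, some of the `g`s on `p.gFree` are moved to `sched.gFree`.

The `disable` field is controlled through `schedEnableUser`.

type schedt struct {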

// Central cache of sudog structs.

sudoglock mutex

sudogcache *sudog

// Central pool of available defer structs of different sizes.

deferlock mutex

deferpool [5]*_defer

// freem is the list of m's waiting to be freed when their

// m.exited is set. Linked through m.freelink.

freem *m

}

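- `sudoglock`, `sudogcache`: the central cache of sudog structs and its lock.
- `deferlock`, `deferpool`: the central pool of available defer structs and its lock.
- `freem`: the list of Ms waiting to be freed once their `m.exited` is set.

One is a sudog cache and the other is a defer pool, and each has its own lock.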

type schedt struct {

gcwaiting uint32 // gc is waiting to run

stopwait int32

stopnote note

sysmonwait uint32

sysmonnote note

}

- `gcwaiting`: set when the GC is waiting to run.

type schedt struct {

// safepointFn should be called on each P at the next GC

// safepoint if p.runSafePointFn is set.

safePointFn func(*p)

safePointWait int32

safePointNote note

profilehz int32 // cpu profiling rate

procresizetime int64 // nanotime() of last change to gomaxprocs

totaltime int64 // ∫gomaxprocs dt up to procresizetime

// sysmonlock protects sysmon's actions on the runtime.

//

// Acquire and hold this mutex to block sysmon from interacting

// with the rest of the runtime.

sysmonlock mutex

}

- `sysmonlock`: protects sysmon's interaction with the rest of the runtime.

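Summary

- The scheduler records whether a network poll is in progress and the time of the last poll.
- It keeps information about Ms: the idle Ms and how many there are, how many have been created so far, how many are currently spinning, and the maximum number allowed. It also records the number of locked Ms waiting for work.
- It keeps information about Ps: the idle Ps and how many there are.
- It holds the current number of system goroutines.
- It has a global run queue of Gs and that queue's size.
- Through `gFree` it records the free Gs that can be reused.
- It holds the sudog cache and the `deferpool`.
- It has a global lock (`lock`), a sysmon lock (`sysmonlock`), and other locks such as `sudoglock`.
- The `disable` field controls whether scheduling of user goroutines is disabled (via `schedEnableUser`).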

If you want to learn about Go's memory management, I recommend reading this article first: https://blog.haohtml.com/archives/29385
